PARASOL: Parametric Style Control for Diffusion Image Synthesis

Abstract

We propose PARASOL, a multi-modal synthesis model that enables disentangled, parametric control over the visual style of an image by jointly conditioning synthesis on both content and a fine-grained visual style embedding. We train a latent diffusion model (LDM) using modality-specific losses and adapt classifier-free guidance to encourage disentangled control over the independent content and style modalities at inference time. We leverage auxiliary semantic and style-based search to create training triplets for supervising the LDM, ensuring that the content and style cues are complementary. PARASOL shows promise for enabling nuanced control over visual style in diffusion models for image creation and stylization, as well as for generative search, where text-based search results can be adapted to more closely match user intent by interpolating both content and style descriptors.

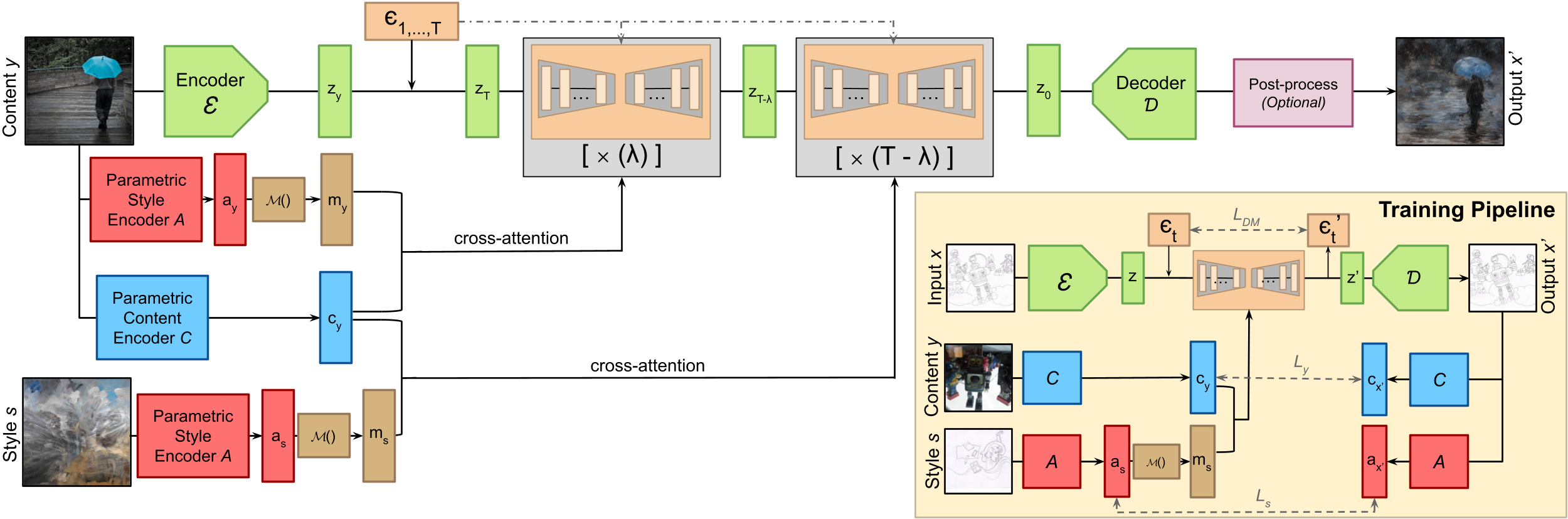

Training and Sampling Pipeline

The backbone of PARASOL is a Latent Diffusion Model (LDM) adapted to encourage style/content disentanglement and to give users more control over the output.
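The training objective is not spelled out here beyond the modality-specific losses mentioned in the abstract. As a minimal sketch, assuming a standard epsilon-prediction LDM objective, the snippet below noises a clean latent and independently drops the content and style conditions, a common way to train a single network for classifier-free guidance over two inputs. The function names, the dropout probabilities, the linear noise schedule, and the use of zero vectors as null embeddings are all hypothetical; PARASOL's own modality-specific losses are not reproduced.

```python
import numpy as np


def prepare_training_example(z0, content_emb, style_emb, alpha_bar, rng,
                             p_drop_content=0.1, p_drop_style=0.1):
    """Hypothetical training-step sketch (not PARASOL's published code).

    Noises a clean latent z0 at a random timestep and independently replaces
    the content and style embeddings with a null (zero) vector, so one network
    sees every combination of present/absent conditions during training.
    """
    t = int(rng.integers(len(alpha_bar)))
    eps = rng.standard_normal(z0.shape)
    # Forward diffusion: z_t = sqrt(a_bar_t) * z0 + sqrt(1 - a_bar_t) * eps
    z_t = np.sqrt(alpha_bar[t]) * z0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    # Zero vectors stand in for learned null embeddings (assumption).
    y = np.zeros_like(content_emb) if rng.random() < p_drop_content else content_emb
    s = np.zeros_like(style_emb) if rng.random() < p_drop_style else style_emb
    return z_t, t, y, s, eps


def denoising_loss(eps_pred, eps):
    """Mean squared error between predicted and true noise."""
    return float(np.mean((eps_pred - eps) ** 2))


# Usage with random stand-ins for the latent and the two embeddings.
rng = np.random.default_rng(0)
alpha_bar = np.cumprod(1.0 - np.linspace(1e-4, 0.02, 1000))  # linear beta schedule (assumed)
z0 = rng.standard_normal((4, 8, 8))
content_emb = rng.standard_normal(512)
style_emb = rng.standard_normal(256)
z_t, t, y, s, eps = prepare_training_example(z0, content_emb, style_emb, alpha_bar, rng)
```

A denoiser would then predict `eps` from `(z_t, t, y, s)` and be trained with `denoising_loss`; dropping each condition separately is what later allows content and style to be guided with separate strengths.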

Training Data

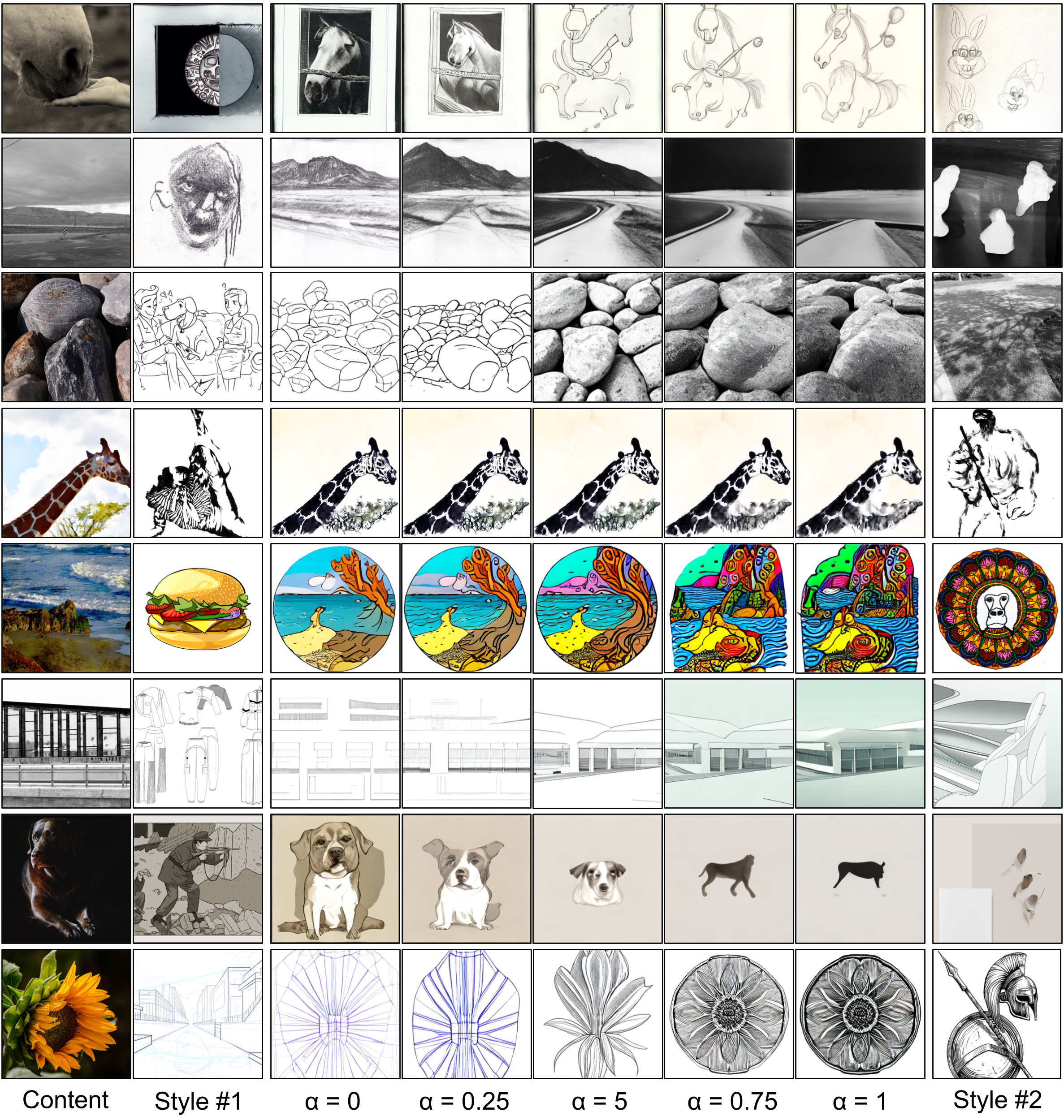

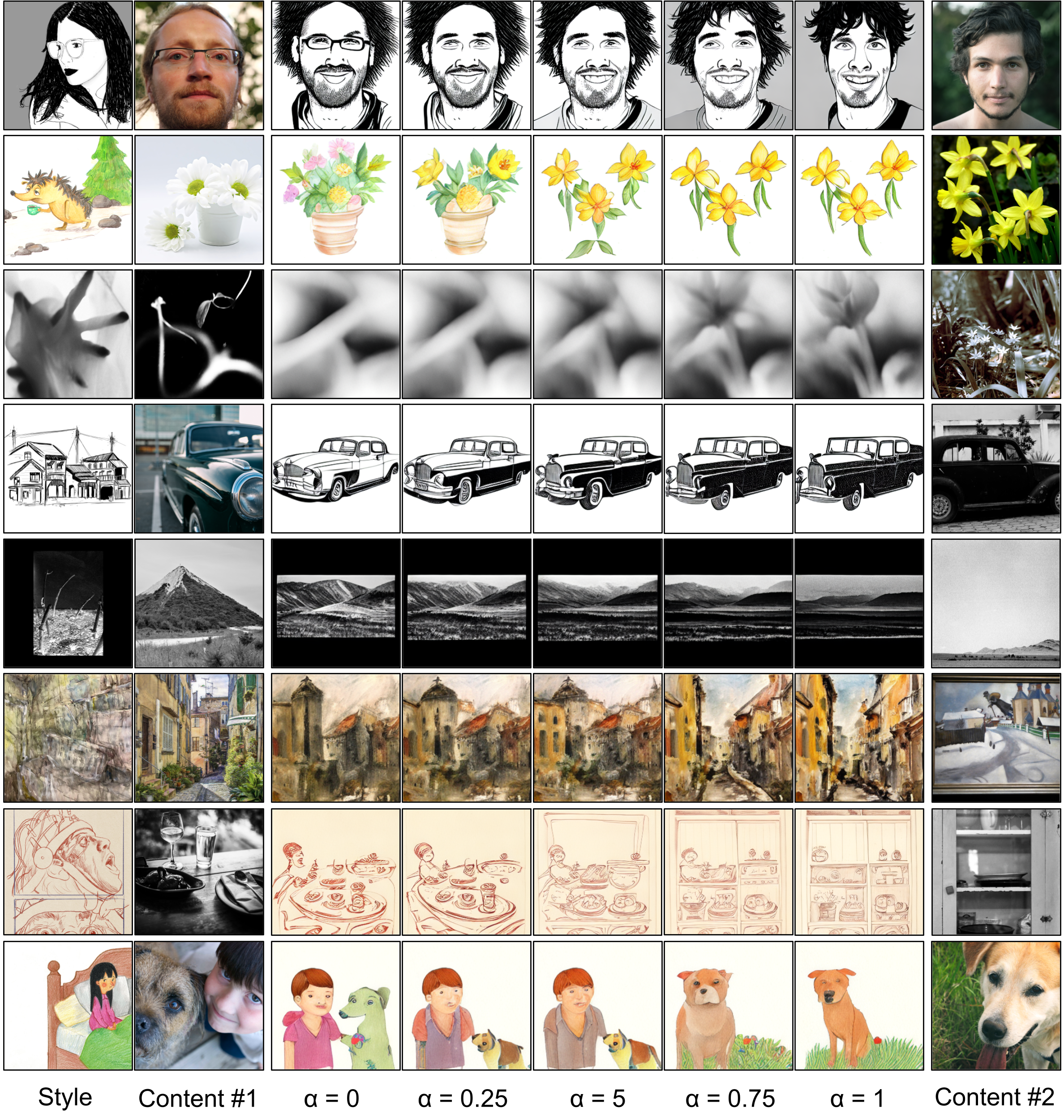

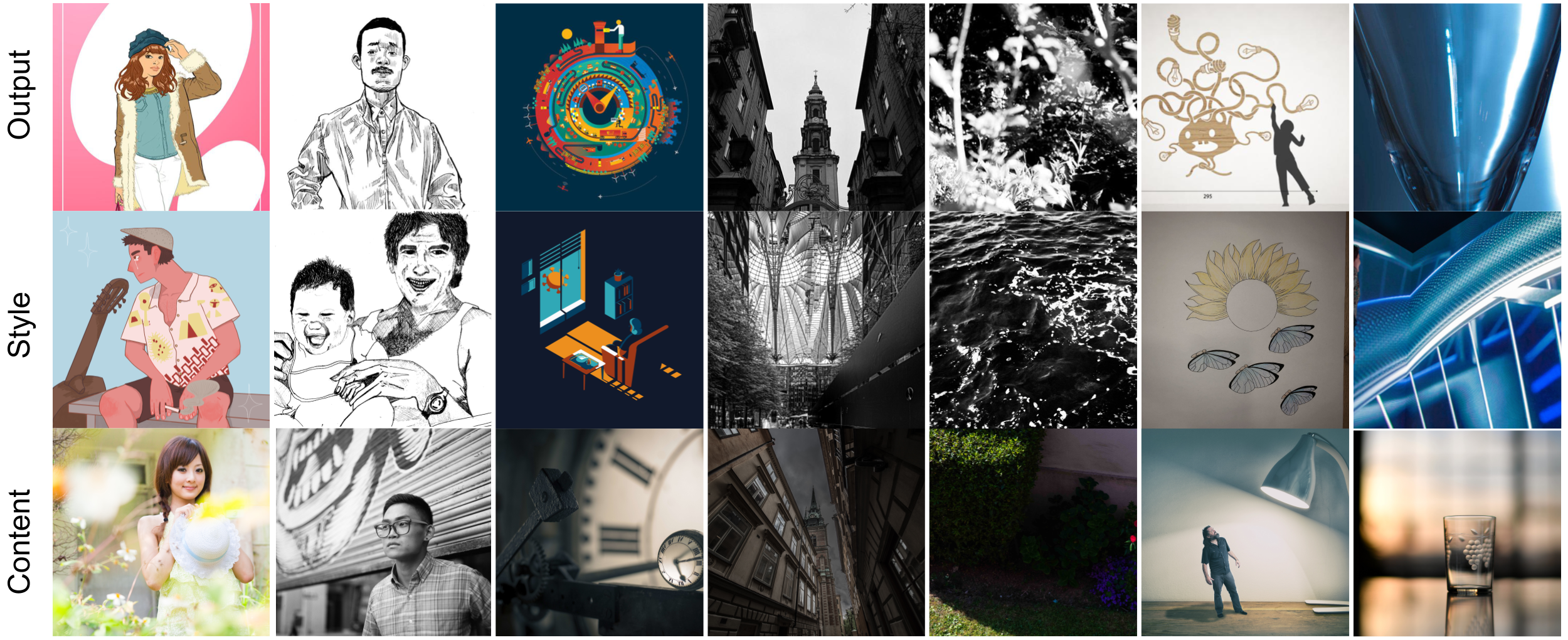

We generate triplets (output x, content y, style s) to train our model. In each triplet, x and y share content but not style, while x and s share style but not content.
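The triplet constraint can be illustrated as two constrained nearest-neighbour searches over precomputed embeddings. This is a minimal sketch, assuming each image already has a semantic (content) embedding and a style embedding; the helper `mine_triplet`, the cosine-similarity thresholds, and the selection rule are hypothetical and not taken from PARASOL.

```python
import numpy as np


def l2_normalize(v):
    """Scale each row to unit length so dot products are cosine similarities."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def mine_triplet(i, content_emb, style_emb, max_style_sim=0.5, max_content_sim=0.5):
    """Hypothetical triplet mining for anchor image i (the output x).

    y: the most content-similar image whose style similarity to x is below max_style_sim.
    s: the most style-similar image whose content similarity to x is below max_content_sim.
    Returns (x_idx, y_idx, s_idx), or None if no valid y or s exists.
    """
    c = l2_normalize(np.asarray(content_emb, dtype=float))
    st = l2_normalize(np.asarray(style_emb, dtype=float))
    content_sim = c @ c[i]
    style_sim = st @ st[i]
    not_self = np.arange(len(c)) != i
    y_ok = not_self & (style_sim < max_style_sim)      # y candidates: style must differ from x
    s_ok = not_self & (content_sim < max_content_sim)  # s candidates: content must differ from x
    if not y_ok.any() or not s_ok.any():
        return None
    y_idx = int(np.argmax(np.where(y_ok, content_sim, -np.inf)))
    s_idx = int(np.argmax(np.where(s_ok, style_sim, -np.inf)))
    return i, y_idx, s_idx


# Toy pool of four images with 2-D content and style embeddings.
content = np.array([[1.0, 0.0], [1.0, 0.1], [0.0, 1.0], [0.9, 0.1]])
style = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.05], [1.0, 0.0]])
print(mine_triplet(0, content, style))  # (0, 1, 2)
```

Image 3 is rejected as y because it shares x's style, and image 1 is rejected as s because it shares x's content, so the returned triplet respects both "share one factor, differ in the other" constraints.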

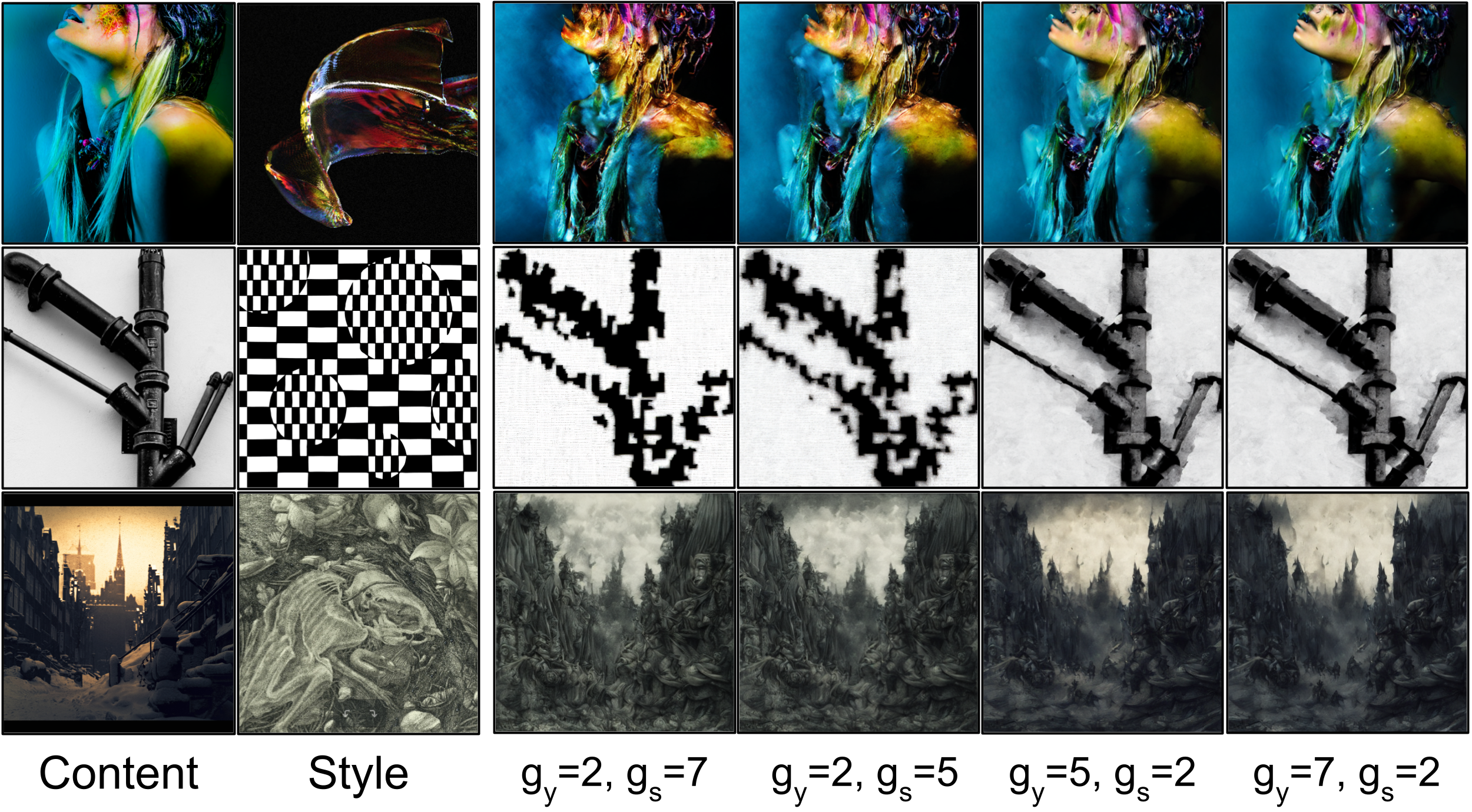

Controllability Experiments

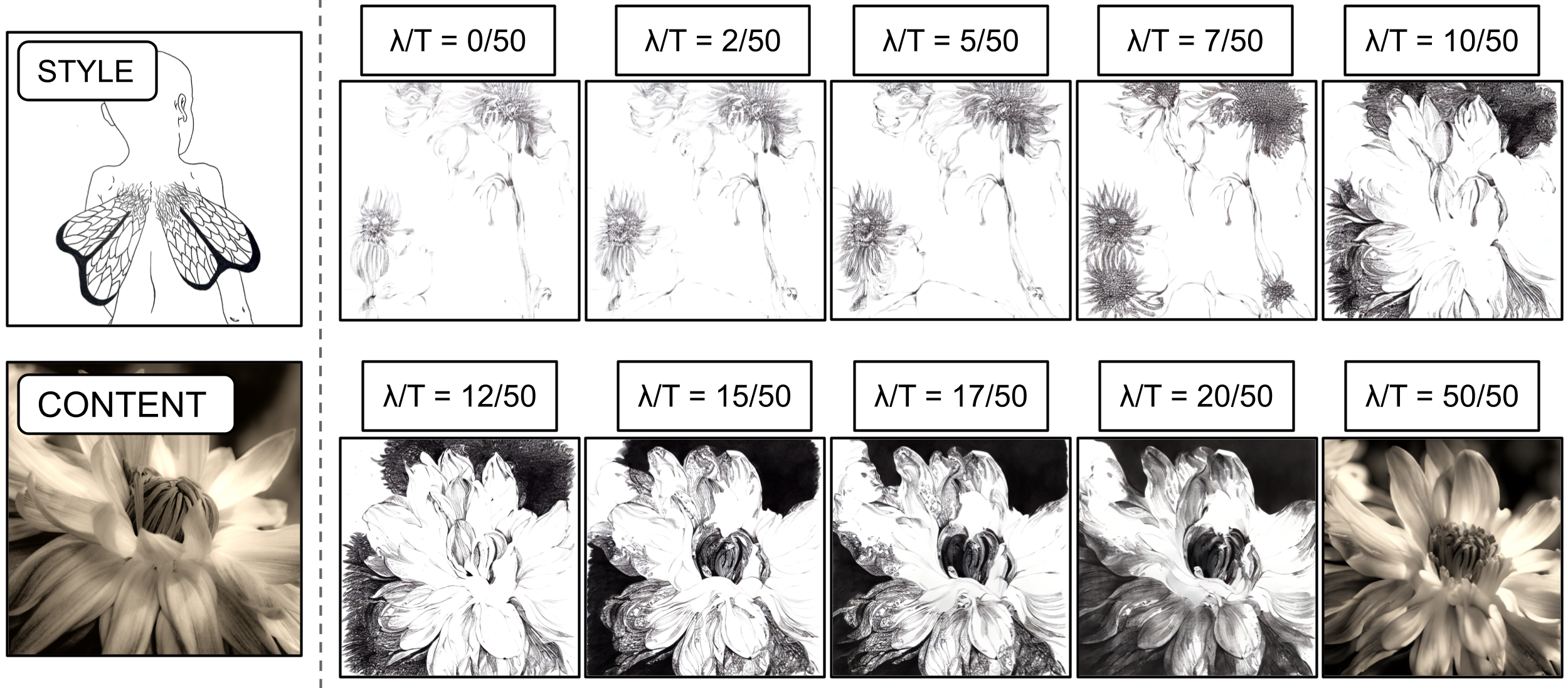

We introduce several sampling-time parameters that give users finer control over how each input is used.
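One plausible form for such parameters is a separate guidance scale per modality. The sketch below uses the compositional form of classifier-free guidance, which adds the content and style guidance directions to the unconditional prediction; it is an assumption that PARASOL's adapted guidance takes this form, and `two_scale_cfg`, `lambda_content`, and `lambda_style` are hypothetical names.

```python
import numpy as np


def two_scale_cfg(eps_uncond, eps_content, eps_style, lambda_content, lambda_style):
    """Combine three noise predictions with independent content/style scales.

    Compositional classifier-free guidance (assumed form, hypothetical names):
        eps = eps(z_t) + lambda_content * (eps(z_t | y) - eps(z_t))
                       + lambda_style   * (eps(z_t | s) - eps(z_t))
    """
    return (eps_uncond
            + lambda_content * (eps_content - eps_uncond)
            + lambda_style * (eps_style - eps_uncond))


# Usage with toy constant arrays standing in for the three predictions.
e_u, e_y, e_s = np.zeros(3), np.ones(3), np.full(3, 2.0)
guided = two_scale_cfg(e_u, e_y, e_s, lambda_content=2.0, lambda_style=0.5)  # every entry is 3.0
```

Setting `lambda_style` to zero reduces this to ordinary guidance on content alone, and raising it pushes samples toward the reference style. The three predictions are assumed to come from one network evaluated with conditions dropped, as in training.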

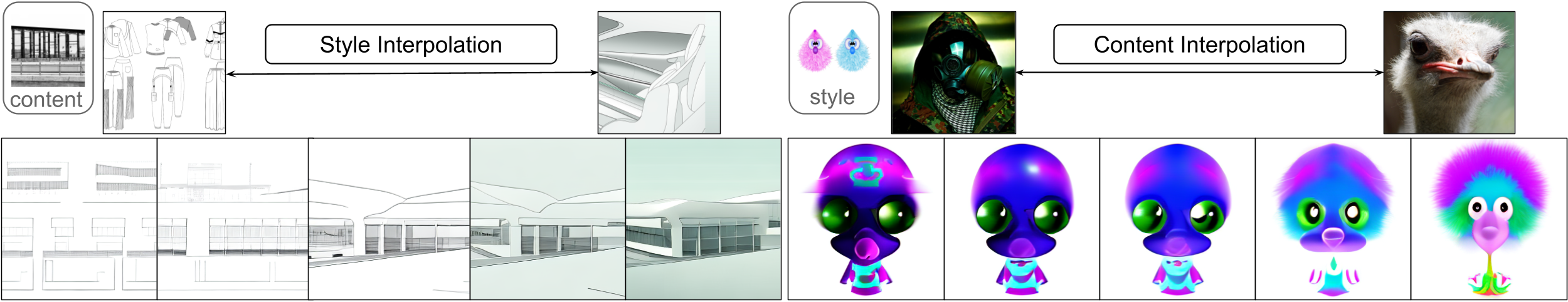

Application: Content/Style Interpolation

Owing to the metric properties of the parametric style encoder, PARASOL can generate images conditioned on an interpolation of several styles.
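If nearby points in the style embedding space correspond to similar styles (the metric property mentioned above), a weighted average of style embeddings is a natural way to blend them before conditioning. The sketch below assumes unit-norm style embeddings and projects the blend back onto the unit sphere; `interpolate_styles` is a hypothetical helper, and the same weighting could be applied to content descriptors.

```python
import numpy as np


def interpolate_styles(style_embs, weights, renormalize=True):
    """Blend several style embeddings with non-negative weights (hypothetical helper).

    style_embs: array-like of shape (k, d), one embedding per reference style.
    weights: k non-negative numbers; they are normalised to sum to 1.
    """
    embs = np.asarray(style_embs, dtype=float)
    w = np.asarray(weights, dtype=float)
    if w.shape != (embs.shape[0],):
        raise ValueError("need exactly one weight per style embedding")
    if np.any(w < 0) or w.sum() == 0:
        raise ValueError("weights must be non-negative and not all zero")
    w = w / w.sum()
    mixed = w @ embs  # convex combination, shape (d,)
    if renormalize:
        norm = np.linalg.norm(mixed)
        if norm == 0:
            raise ValueError("blend has zero norm; cannot renormalise")
        mixed = mixed / norm  # back onto the unit sphere (assumes unit-norm inputs)
    return mixed


# Usage: a 70/30 blend of two reference styles.
blend = interpolate_styles([[1.0, 0.0], [0.0, 1.0]], [0.7, 0.3])
```

The resulting vector would replace the single style embedding s when sampling; sweeping the weights produces a gradual transition between the reference styles.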